Thinking Big Data for commerce

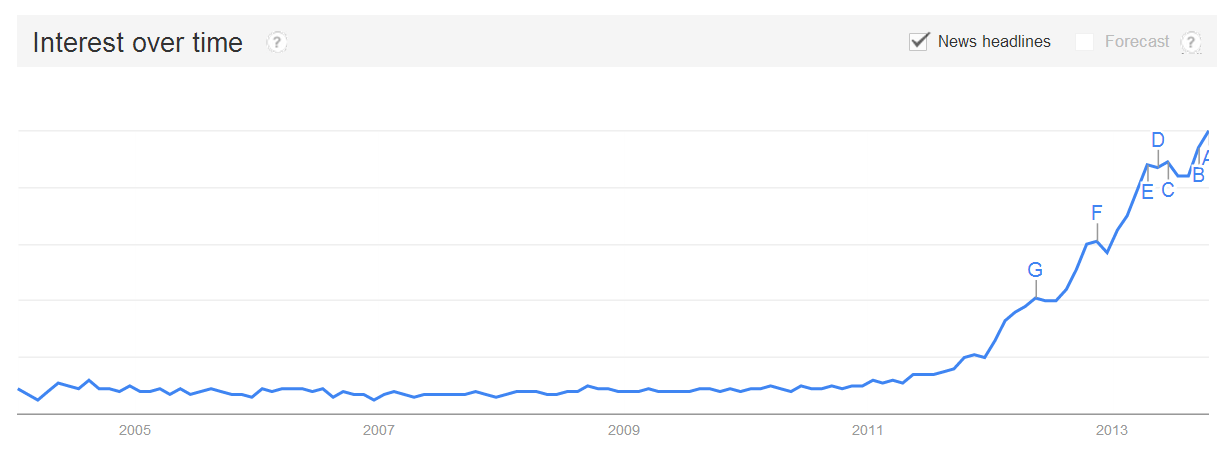

As of October 2013, Google Trends indicates that the buzz around Big Data is still growing. Based on my observations of many services company (mostly retailers though), I believe that it’s not all hype, and that indeed Big Data is going to deeply transform those businesses. However, I also believe that Big Data is wildly misunderstood by most, and that most Big Data vendors should not be trusted.

Search volume on the term “Big Data” as reported by Google Trends

Search volume on the term “Big Data” as reported by Google Trends

A mechanization of the mind based on data

Big Data, like many technological viewpoint, is both quite new, and very ancient. It’s a movement deeply rooted in the perspective of mechanization of the human mind which started decades ago. The Big Data viewpoint states the data can be used as the source of knowledge to produce automated decisions.

This viewpoint is very different from Business Intelligence or Data Mining, because humans get kicked out of the loop altogether. Indeed, producing millions of numbers a day cost almost nothing; however, if you need people to read those numbers, the costs are fantastic. Big Data is mechanization : the number produced are decision and nobody is needed to interfere at the lowest level to get things done.

The archetype of the Big Data application is the spam filter. First, it’s an ambient software, taking important decisions all the time: deciding for me which message is not worth my time to read certainly is an important decision. Second, it has has been built based on the analysis of lot of data, mostly by establishing databases of messages labeled as spam or not-spam. Finally, it requires almost zero contribution of its end-user to deliver its benefits.

Not every decision is eligible to Big Data

Decisions that are eligible to a Big Data processing are everywhere:

- Choosing the next most profitable prospect to send the paper catalog.

- Choosing the quantity of goods to replenish from the supplier.

- Choosing the price of an item in a given store.

Yet, such a decision needs two key ingredients:

- A large number of very similar decisions are taken all the time.

- Relevant data exist to bootstrap an automated decision process.

Without No1, it’s not cost-efficient to even tackle the problem from a Big Data viewpoint, Business Intelligence is better suited. Without No2, it’s only rule-based automation.

Choosing the problem first

The worst mistake that a company can do when starting a Big Data project consists of choosing the solution before choosing the problem. As of fall 2013, Hadoop is now the worst offender. I am not saying that there is anything wrong with Hadoop per se; however choosing Hadoop without even checking that it’s a relevant solution is a costly mistake. My own experience with service companies (commerce, hospitality, healthcare …) indicates that, for those companies, you hardly ever need any kind of distributed framework.

The one thing you need to start a Big Data project is a business problem that would be highly profitable to solve:

- Suffering from too high or too low stock levels.

- Not offering the right deal to the right person.

- Not having the right price for the right location.

The ROI is driven by the manpower saved by the automation, and by getting better decisions. Better decisions can be achieved because the machine can be made smarter than a human with less than 5s of brain-time per individual decision. Indeed, in service companies, productivity targets imply that decisions have to be made fast.

Your average supermarket has typically about 20,000 references, that is, 330 hours of work if employees spend 1 minute per reordered quantity. In practice, when reorders are made manually, employees can only afford a few seconds per reference.

Employees are not going to like Big Data

Big Data is a mechanization process. And, it’s usually easy to tell to spot the real thing just by looking at internal changes caused by the project. Big Data is not BI (Business Intelligence) where employees/managers are offered a new gizmo, changing nothing to the status quo. The first effect of Big Data project is typically to reduce the number of employees (sometimes massively) required to process a certain type of decisions.

Don’t expect teams soon-to-replaced-by-machines to be overjoyed by such a perspective. At best, upper management can expect passive resistance from their organization. Across dozens of companies, I don’t think I have observed, among services companies, any Big Data project succeeding without a direct involvement from the CEO herself. That’s unfortunately the steepest cost of Big Data.

The roots of Big Data

The concept of Big Data did emerge only in 2012 among mainstream media, but its roots are much older. Big Data results from 3 vectors of innovations:

- Better computing infrastructures to move data around, to process data, to store data. The latest instance of this trend is cloud computing.

- Algorithms and statistics. Over the last 15 years, the domain of statistical learning has exploded (driverless cars are a testimonial of that).

- Enterprise digitalization. Most (large) companies have completed their digitalization process where each key business operation has its counterpart digital record.

Big Data is first a result of the mix of those 3 ingredients.

Big Data = Big Budget?

Enterprise vendors are now chanting their new motto big data = big budget, and you can’t afford not to take the Big Data train, right? Hadoop, SAP Hana, Oracle Exalytics, to name a few, are all going to cost you a small fortune when looking at TCO (total cost of ownership).

For example, when I ask my clients how much does it cost to store 24TB of data on disk? Most of the answers come above 10,000€ per month. Well, OVH (hosting company) is offering 24TB servers from 110€ / month. Granted, this is not highly redundant storage, but at this price, you can afford a few spares.

Then, the situation is looks even more absurd when one realizes that storing 1 year of receipts of Walmart - the largest retailer world-wide - can fit on a USB key. Unless you want to process images or videos,very few datasets cannot be made to fit on a USB key.

Here, there is a media-induced problem: there are about 50 companies world-wide who have web-scale computing requirements such as Google, Microsoft, Facebook, Apple, Amazon to name a few. Most Big Data frameworks originate from those companies: Hadoop comes from Yahoo, Cassandra comes from Facebook, Storm is now Twitter, etc. However, all those companies have in common of roughly processing about 1000x more data that the largest retailers.

The primary cost of a Big Data project is the focus that top management needs to invest. Indeed, focus comes with a strong opportunity cost: while the CEO is busy thinking how to transform her company with Big Data, fewer decisions can be made on other pressing matters.

Iterations and productivity

A big data solution does not survive its first contact with data.

Big Data is a very iterative process. First, the qualification of data (see below) is an extremely iterative process. Second, tuning the logic to obtain acceptable results (the quality of the decisions) is also iterative. Third, tuning the performance of the system is, again, iterative. Expecting a success at first try is heading for failure, unless you tackle a commoditized problem, like spam filtering, with a commoditized solution, like Akismet.

Since many iterations are unavoidable, the cost of the Big Data project strongly impacted by the productivity of the people executing the project.In my experience at teaching distributed computing at the Computer Science Department Ecole normale supérieure), if the data can kept on a regular 1000€ workstation, the productivity of any developer is tenfold higher compared to a situation where the data has to be distributed - no matter how many frameworks and toolkits are thrown at the problem.

That’s why it’s critical to keep things lean and simple as long it’s possible. Doing so gives your companies an edge against all your competitors who will tar pit themselves with Big Stuff.

Premature optimization is the root of all evil. Donald Knuth 1974

Qualifying the data is hard

The most widely estimated challenge is Big Data is the qualification of the data. Enterprise data has neverbeen created with the intent to feed some statistical decision-taking processes. Data exist only as the by-product of software operating the company. Thus, enterprise data (nearly) always full of subtle artifacts that need to be carefully addressed or mitigated.

The primary purpose of point of sales is to let people pay; NOT to produce historical sales records. If a barcode has become unreadable, then, many cashiers might just scan twice another item that happens to have the same price than the non-scannable one. Such a practice is horrifying from a data analysis perspective, but looking at the primary goal (i.e. letting people pay), it’s not unreasonable business-wise.

One of the most frequent antipatterns observed in Big Data projects is the lack of envolvement of the non-IT teams with all the technicalities involved. This is a critical mistake. Non-IT teams need to tackle hands-on data problems because solutions only come from a deep understanding of the business.

Conclusion

Big Data is too important to be discarded as an IT problem, it’s first and foremost a business modernization challenge.