O/C mapper for TableStorage

The Table Service API is the most subtle of the cloud storage services offered by Windows Azure (which also include the Blob and Queue Services for now). I struggled for a while to figure out what was specific to Table Storage from a scalability perspective, or rather from a cost-to-scale perspective, as the cloud charges you according to your consumption.

Since the scope of Table Storage remained fuzzy for me for a long time, the beta version of Lokad.Cloud does not (yet) include support for Table Storage. Rest assured that it is definitely part of our roadmap.

TableStorage vs. others

Let’s start by identifying the specifics of TableStorage compared to other storage options:

- Compared to Blob Storage:

  - Table Storage provides much cheaper fine-grained access to individual bits of information. In terms of I/O costs, Table Storage is up to 100x cheaper than Blob Storage thanks to Entity Group Transactions.

  - Table Storage will (in the near future) provide secondary indexes, while Blob Storage only provides a single hierarchical access path to blobs.

- Compared to SQL Azure:

  - Table Storage lacks just about everything you would expect from a relational database. You cannot perform any join operation or establish a foreign key relationship, and this is very unlikely to ever become available.

  - Yet, while SQL Azure is limited to 10GB (this limit might increase in the future, but this is really not the way to go), Table Storage is expected to be nearly infinitely scalable for its own limited set of operations.

The StorageClient library shipped with the Azure SDK is nice, as it provides a first layer of abstraction over the raw REST API. Nevertheless, coding your app directly against the ADO.NET client library seems painful due to the many implementation constraints that come with the REST API. Further separation of concerns is needed here.

The Fluent NHibernate inspiration

TableStorage is far less expressive than relational databases. Nonetheless, classical O/R mappers are a great source of inspiration, especially nicely designed ones such as NHibernate and its must-have add-on Fluent NHibernate.

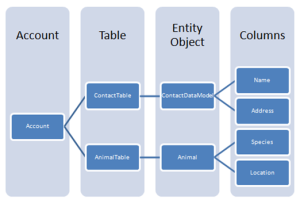

Although the entity-to-object mapping isn’t that complex in the case of TableStorage, I firmly believe that a proper mapping abstraction à la Fluent NH could considerably ease the implementation of cloud apps.
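To make the idea a bit more concrete, here is a minimal sketch of what such a mapping abstraction could look like. It is written in Python purely for brevity and is not the Lokad.Cloud design: the `EntityMap` class and its `rename` and `serialize` methods are hypothetical names invented for this illustration. It only shows the principle of matching by a default rule and overriding where needed.

```python
import json


def _identity(value):
    return value


class EntityMap:
    """Hypothetical fluent mapping: default rule first, overrides on demand."""

    def __init__(self, attributes):
        # Default rule: each attribute maps to an entity property of the
        # same name and is stored unchanged.
        self._maps = {name: (name, _identity, _identity) for name in attributes}

    def rename(self, attribute, column):
        # Override: keep a legacy property name in storage.
        _, encode, decode = self._maps[attribute]
        self._maps[attribute] = (column, encode, decode)
        return self

    def serialize(self, attribute, encode=json.dumps, decode=json.loads):
        # Override: store a non-native value through a serializer.
        column, _, _ = self._maps[attribute]
        self._maps[attribute] = (column, encode, decode)
        return self

    def to_entity(self, obj):
        return {col: enc(obj[attr]) for attr, (col, enc, _) in self._maps.items()}

    def from_entity(self, entity):
        return {attr: dec(entity[col]) for attr, (col, _, dec) in self._maps.items()}


# Usage: two overrides, everything else follows the default rule.
order_map = (
    EntityMap(["customer", "total", "lines"])
    .rename("customer", "CustomerName")  # legacy name that lives in storage
    .serialize("lines")                  # a list is not a native property type
)
order = {"customer": "ACME", "total": 12.5, "lines": [1, 2]}
entity = order_map.to_entity(order)
restored = order_map.from_entity(entity)
```

The point of the fluent style is that the client type stays free to evolve: only the exceptions to the default rule have to be spelled out.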

Among the key scenarios that I would like to see addressed by Lokad.Cloud:

- Seamless management of large entity batches when no atomicity is involved: let’s say you want to update 1M entities in your Table Storage. Entity Group Transactions can reduce I/O costs by up to 100x. Yet, they come with various constraints, such as no more than 100 entities per batch, no more than 4MB per operation, … Since this I/O fine-tuning in the client app would have to be replicated for every table, it really makes sense to abstract it away.

- Seamless overflow management toward the Blob Storage. Indeed, Lokad.Cloud already natively pushes overflowing queued items toward the Blob Storage. In particular, Table Storage assumes that no property weighs more than 64KB, but manually handling the overflow from the client app seems very tedious (actually, a similar feature is already being considered for blobs in SQL Azure).

- A more customizable mapping from .NET types to native property types. The number of property types supported by Table Storage is very limited. Although a few more types might be added in the future, Table Storage won’t (ever?) handle native .NET types. Yet, if you have a serializer at hand, the problem goes away.

- Better versioning management, as .NET properties may or may not match the entity properties. Fluent NH has an exemplary approach here: by default, match with the default rule; otherwise, override the matching. In particular, I do not want the .NET client code to be carved in stone because of some legacy entity that lies in my Table Storage.

- Entity access has to be made through indexed properties (ok, for now, there aren’t many). With the native ADO.NET client, it’s easy to write LINQ queries that give a false sense of efficiency, as if entities could be accessed and filtered against any property. Yet, as data grows, performance is expected to be abysmal (beware of timeouts) unless entities are accessed through their indexes. If data is not expected to grow, then go for SQL Azure instead, as it’s way more convenient anyway.
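As an illustration of the batching scenario above, here is a rough sketch of how a mapper could split a large set of entities into groups that respect the 100-entity and 4MB limits. It is Python rather than .NET, and `plan_batches` and `estimate_size` are hypothetical helpers, not part of any SDK. Two assumptions are worth flagging: the size estimate uses the JSON length as a crude stand-in for the real payload size, and the grouping by `PartitionKey` reflects a constraint of Entity Group Transactions that is not listed above (all entities in a batch must share the same partition).

```python
import json
from itertools import groupby

MAX_ENTITIES = 100            # per batch, as stated above
MAX_BYTES = 4 * 1024 * 1024   # 4MB per operation, as stated above


def estimate_size(entity):
    # Assumption: JSON length approximates the real wire payload size.
    return len(json.dumps(entity).encode("utf-8"))


def plan_batches(entities, max_entities=MAX_ENTITIES, max_bytes=MAX_BYTES):
    """Yield lists of entities that each fit in one batch."""
    keyed = sorted(entities, key=lambda e: e["PartitionKey"])
    # A batch never mixes partitions, so each partition is split on its own.
    for _, group in groupby(keyed, key=lambda e: e["PartitionKey"]):
        batch, size = [], 0
        for entity in group:
            entity_size = estimate_size(entity)
            full = len(batch) >= max_entities or size + entity_size > max_bytes
            if batch and full:
                yield batch
                batch, size = [], 0
            batch.append(entity)
            size += entity_size
        if batch:
            yield batch


# Usage: 250 small entities spread over two partitions.
entities = [
    {"PartitionKey": f"p{i % 2}", "RowKey": str(i), "Value": i}
    for i in range(250)
]
batches = list(plan_batches(entities))
batch_sizes = [len(b) for b in batches]
```

In the best case (small entities, a single partition), 1M entities would fit into 10,000 batches instead of 1M individual requests, which is where the 100x figure comes from. Note that an entity larger than the byte limit still ends up alone in its own batch here; a real mapper would have to reject it or overflow it elsewhere.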

Any further aspects that should be managed by the O/C mapper? Any suggestions? I will be coming back soon with some more implementation details.