Fat entities for Table Storage in Lokad.Cloud

After realizing the value of Table Storage, giving a lot of thought to higher-level abstractions, and stumbling upon a lot of gotchas, I have finally ended up with what I believe to be a decent abstraction for Table Storage.

The purpose of this post is to outline the strategy adopted for this abstraction which is now part of Lokad.Cloud.

Table Storage (TS) comes with an ADO.NET provider that is part of the StorageClient library. Although I think that TS itself is a great addition to Windows Azure, frankly, I am disappointed by the quality of the table client library. It looks like a half-baked prototype, far from what I typically expect from a v1.0 library produced by Microsoft.

In many ways, the TS provider now featured by Lokad.Cloud is a pile of workarounds for glitches that are found in the underlying ADO.NET implementation; but it’s also much more than that.

The primary benefit brought by TS is a much cheaper way of accessing fine-grained data in the cloud, thanks to Entity Group Transactions.

Although secondary indexes may bring extra bonus points in the future, cheap access to fine-grained data is basically the only advantage of Table Storage over Blob Storage at the present day.

I believe there are a couple of frequent misunderstandings about Table Storage. In particular, TS is in no way an alternative to SQL Azure. TS features nothing you would typically expect from a relational database.

TS does feature a query language (a pseudo-equivalent of SQL) that supposedly supports querying entities against any property. Unfortunately, for scalability purposes, TS should never be queried without specifying row keys and/or partition keys. Indeed, filtering on arbitrary properties may give the false impression that it just works; yet to perform such queries, TS has no alternative but to scan the entire storage, which means that your queries will become intractable as soon as your storage grows.

Note: If your storage is not expected to grow, then don’t even bother with Table Storage, and go for SQL Azure instead. There is no point in dealing with the quirks of a NoSQL store if you don’t need to scale in the first place.

Back to the original point: TS features cheaper data access costs, and obviously this aspect had to be central in Lokad.Cloud - otherwise, it would not have been worthwhile to even bother with TS in the first place.

Fat entities

To some extent, Lokad.Cloud puts aside most of the property-oriented features of TS. Indeed, the query aspects of properties don’t scale anyway (except for the system ones).

Thus, the first idea was to go for fat entities. Here is the entity class shipped in Lokad.Cloud:

    public class CloudEntity<T>
    {
        public string RowKey { get; set; }
        public string PartitionKey { get; set; }
        public DateTime Timestamp { get; set; }
        public T Value { get; set; }
    }

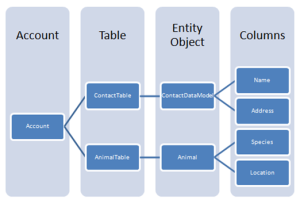

Lokad.Cloud exposes the 3 system properties of TS entities. Then, the CloudEntity class is generic and exposes a single custom property of type T.

When an entity is pushed toward TS, the entity is serialized using the usual serialization pattern applied within Lokad.Cloud.

This entity is said to be fat because the maximal size for a CloudEntity is 1MB (actually it’s 960KB), which corresponds to the maximal size of an entity in TS in the first place.

Instead of going for a 64KB limitation per property, Lokad.Cloud offers an implementation that comes with a single 1MB limitation for the whole entity.

Note: Lokad.Cloud relies, under the hood, on a hacky implementation which involves spreading the serialized representation of the CloudEntity over 15 binary properties.
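To make the spreading idea concrete, here is a minimal sketch of how a serialized payload could be cut into property-sized chunks and glued back together. This is not the actual Lokad.Cloud code: the class and method names (FatEntityChunks, Split, Join) are hypothetical, and the sketch only assumes the limits quoted above, namely 64KB per property and 15 properties.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper, for illustration only (not the Lokad.Cloud implementation).
public static class FatEntityChunks
{
    // Limits quoted in the post: 64KB per property, 15 properties (960KB total).
    public const int MaxPropertySize = 64 * 1024;
    public const int MaxProperties = 15;

    // Splits a serialized payload into chunks of at most 64KB each.
    public static List<byte[]> Split(byte[] payload)
    {
        if (payload == null) throw new ArgumentNullException(nameof(payload));
        if (payload.Length > MaxPropertySize * MaxProperties)
            throw new ArgumentException("Payload exceeds the 960KB limit.", nameof(payload));

        var chunks = new List<byte[]>();
        for (int offset = 0; offset < payload.Length; offset += MaxPropertySize)
        {
            int size = Math.Min(MaxPropertySize, payload.Length - offset);
            var chunk = new byte[size];
            Buffer.BlockCopy(payload, offset, chunk, 0, size);
            chunks.Add(chunk);
        }
        return chunks;
    }

    // Concatenates the chunks, in order, back into the original payload.
    public static byte[] Join(IEnumerable<byte[]> chunks)
    {
        return chunks.SelectMany(c => c).ToArray();
    }
}
```

Each chunk would then be stored as one binary property of the underlying TS entity, and read back in the same order before deserialization.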

At first glance, this design appears questionable as it introduces some serialization overhead instead of relying on the native TS property mechanism. Well, a raw naked entity costs about 1KB due to its Atom representation. In fact, the serialization overhead is negligible, even for small entities; and for complex entities, our serialized representation is usually more compact anyway due to GZIP compression.

The whole point of fat entities is to remove as much friction as possible for the end-developer. Instead of worrying about tight 64KB limits for each property, the developer only has to worry about a single and much higher limitation.

Furthermore, instead of trying to cram your logic into a dozen supported property types, Lokad.Cloud offers full strong-typing support through serialization.

Batching everywhere

Lokad.Cloud features a table provider that abstracts the Table Storage. A couple of key methods are illustrated below.

    public interface ITableStorageProvider
    {
        void Insert<T>(string tableName, IEnumerable<CloudEntity<T>> entities);
        void Delete<T>(string tableName, string partitionKey, IEnumerable<string> rowKeys);
        IEnumerable<CloudEntity<T>> Get<T>(string tn, string pk, IEnumerable<string> rowKeys);
    }

Those methods have no limitation concerning the number of entities. Lokad.Cloud takes care of building batches of 100 entities, or fewer, since the group transaction must also ensure that the total request weighs less than 4MB.

Note that the 4MB restriction of TS for transactions is a very reasonable limitation (I am not criticizing this aspect), but the client code of the cloud app is really not the right place to enforce this constraint, as it significantly complicates the logic.
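The batching rule can be sketched as follows. This is only an illustration under stated assumptions, not the Lokad.Cloud implementation: the Batching class, the Slice method and the sizeOf estimator are hypothetical names, and the sketch leaves out the grouping by partition key that Entity Group Transactions also require.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper, for illustration only (not the Lokad.Cloud implementation).
public static class Batching
{
    public const int MaxEntitiesPerBatch = 100;
    public const long MaxBatchBytes = 4L * 1024 * 1024;

    // Lazily groups items into batches of at most 100 items whose total
    // estimated weight stays below 4MB. 'sizeOf' is an assumed estimator of
    // the weight of one item in bytes. An item that alone weighs 4MB or more
    // still ends up in a batch of its own.
    public static IEnumerable<List<T>> Slice<T>(IEnumerable<T> items, Func<T, int> sizeOf)
    {
        var batch = new List<T>();
        long batchBytes = 0;
        foreach (var item in items)
        {
            int size = sizeOf(item);
            // Start a new batch when adding the item would break either limit.
            if (batch.Count > 0 &&
                (batch.Count == MaxEntitiesPerBatch || batchBytes + size >= MaxBatchBytes))
            {
                yield return batch;
                batch = new List<T>();
                batchBytes = 0;
            }
            batch.Add(item);
            batchBytes += size;
        }
        if (batch.Count > 0) yield return batch;
    }
}
```

With 250 small entities, such a slicer yields batches of 100, 100 and 50; with entities of 1.5MB each, it yields batches of two entities, since a third one would reach the 4MB limit.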

Then, the table provider also abstracts away all the subtle retry policies that are needed while interacting with TS. For example, when posting a 4MB transaction request, there is a non-zero probability of hitting an OperationTimedOut error. In such a situation, you don’t want to just retry your transaction, because it’s very likely to fail again. Indeed, the time-out happens when your upload speed does not match the 30s time-out of TS. Hence, the transaction needs to be split into smaller batches, instead of being retried as such.
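A split-instead-of-retry policy could look roughly like the sketch below. Again, this is an assumption-laden illustration rather than the Lokad.Cloud code: SplitOnTimeout and Send are hypothetical names, the 'send' delegate stands in for the actual transaction request, and a plain TimeoutException stands in for the OperationTimedOut error.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper, for illustration only (not the Lokad.Cloud implementation).
public static class SplitOnTimeout
{
    // Sends 'batch' through 'send'. On a TimeoutException (a stand-in for
    // OperationTimedOut), the batch is cut in two halves that are sent
    // separately, instead of resending the same oversized request.
    public static void Send<T>(IList<T> batch, Action<IList<T>> send)
    {
        if (batch.Count == 0) return;
        try
        {
            send(batch);
        }
        catch (TimeoutException)
        {
            // A single entity cannot be split further: let the error surface.
            if (batch.Count == 1) throw;

            int half = batch.Count / 2;
            var first = new List<T>();
            var second = new List<T>();
            for (int i = 0; i < batch.Count; i++)
                (i < half ? first : second).Add(batch[i]);

            Send<T>(first, send);
            Send<T>(second, send);
        }
    }
}
```

One design consequence is worth keeping in mind: once a batch is split, the two halves are no longer part of a single group transaction, so the operation as a whole is not atomic anymore.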

Lokad.Cloud goes through those details so that you don’t have to.

Reader Comments (5)

During the early CTPs, the team refused to call the StorageClient library an API because it hadn’t been optimized and wasn’t production quality. I haven’t done a serious comparison of the library’s released v1 and the early CTPs, but your comments indicate that the team didn’t spend a great deal of time or effort on improving the release version. Cheers, –rj.

January 16, 2010 | Roger Jennings

Roger, I believe that most of the problems related to the Table Storage ADO.NET client come from the schizophrenic approach taken by MS on that one. On one hand, it’s supposed to be a high-level abstraction built upon the same OO model as the one used for SQL databases. Obviously that part is an abstraction that leaks in every single method, because Table Storage is absolutely NOT an RDBMS no matter how you look at it. Then, on the other hand, it also fails at providing any convenient access to the raw Table Service API, which is really frustrating. I would have really preferred MS to expose a clean REST-level implementation for Table Storage. The ADO.NET component would have been built on top of this library, and cloud app developers would have had the choice to go for ADO.NET or the underlying REST wrapper.

January 16, 2010 | Joannes Vermorel

Joannes, great great blog, just stumbled upon it. I like your approach to TS with fat entities, the only comment I have is that it is sometimes worthwhile to be able to map some properties as TS columns as it is easier to manage from a development perspective. These properties would still take part in the serialization but, with the future advent of secondary indexes, will be more efficiently queryable. At the same time, it is easier to look at a Table Storage entity set in Azure Storage Explorer, for instance, if you do have some meaningful columns. These could of course be easily switched off in production as long as we’re not querying for them. I will take a look at your Lokad.Cloud implementation and see if we decide to take a dependency on it. If yes, I’ll give you some feedback after a few weeks of usage. I can already see some benefits from deriving from CloudEntity and delegating some values from Value as r/o properties that are then mapped to TS columns. I wish you and Lokad the best, Gabor.

February 11, 2010 | Gabor Ratky

Hello Gabor, thank you very much for the nice feedback. You are correct about the idea of mapping “some” properties, especially considering that there will be secondary indexes in the future. Yet, the spec for secondary indices is still unknown. Hence, instead of making wild guesses that would be likely to be wrong later on, I am taking a minimalistic approach for FatEntities, which will evolve in due time when secondary indices become available.

February 12, 2010 | Joannes Vermorel

Hopefully secondary indices are around the corner. Amazon Web Services' SimpleDB automatically creates indices for column data and as a result, their table implementation is very usable.

April 22, 2010 | Shaun Tonstad